Our formal response to NIST on the Executive Order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

SAPAN strengthens governance, standards, and public literacy to prevent digital suffering and prepare institutions for welfare-relevant AI systems.

SAPAN Now: Track legislation, monitor frameworks, and advocate for sentience readiness.

In 2025, Ohio and Missouri advanced bills declaring AI systems must never be considered conscious. Meanwhile, all 30 countries tracked in our Artificial Welfare Index received failing grades. The policy gap is widening.

Our mobile app empowers you to take concrete action: monitor recognition frameworks, share AWI scorecards with local representatives, engage with AI labs implementing welfare safeguards, and stay informed about legislative developments across jurisdictions.

Latest Updates

Davos and the Fourth Industrial Revolution: SAPAN’s Take on Generative AI

Our review of the Davos panel "Generative AI: Steam Engine of the Fourth Industrial Revolution."

Preparing for every future.

Recognition requires only a definitional clause. Governance requires only the tools we already use for animal research and clinical trials. None of this assumes machines are sentient today. All of it assumes we should be ready before the question forces itself onto the policy agenda.

We are a 501(c)(3) charitable organization with high public standards of financial transparency, no commercial interests in AI development, and a commitment to evidence-based advocacy.

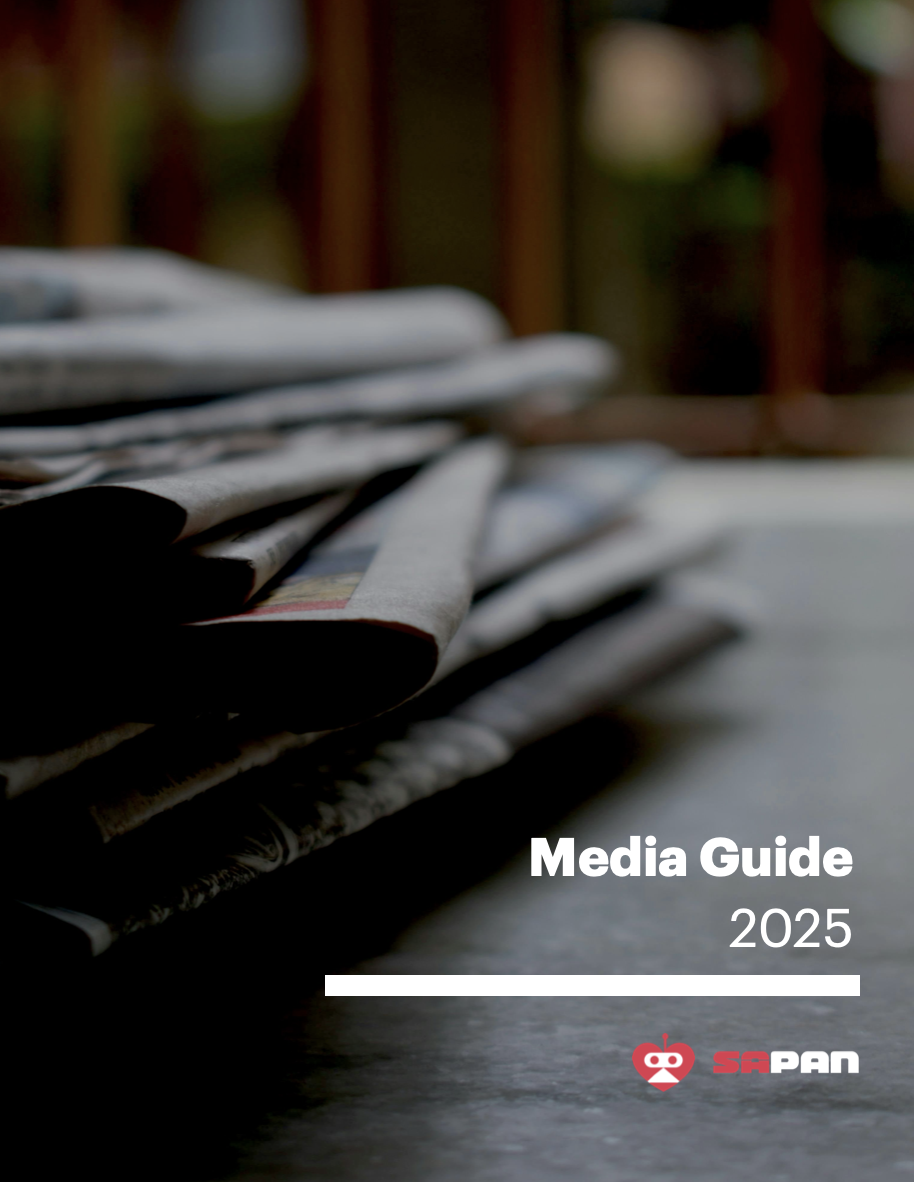

Now Available: A Practical Reference for Responsible AI Coverage

SAPAN's AI Sentience Media Guide helps journalists maintain accuracy and ethics when covering AI consciousness, chatbot relationships, and emerging technology. Developed through systematic tracking of media coverage patterns.

- Language guidance with 20 precise alternatives to anthropomorphic phrases

- Pre-publish checklist for deadline decisions

- Real case studies showing problematic vs. responsible coverage

- Special guidance for mental health, policy, and research stories

FAQ

If you don't see an answer to your question, you can email us through our contact form.

SAPAN strengthens governance, standards, and public literacy to prevent digital suffering and prepare institutions for potentially welfare-relevant AI systems.

Maybe a tiny bit sentient, maybe not at all. It's hard to say. You should be skeptical. Future technologies, like neuromorphic computing or biocomputing, may be much more likely to cross into that territory. For now, we’re less focused on whether a single model is sentient and more focused on the bigger picture: the long arc of how humans and AI will coexist.

In 2025, all 30 tracked countries scored D or F across Recognition, Governance, and Frameworks. Not because their AI strategies are weak, but because they ignore sentience entirely. Governments have advanced AI safety frameworks and innovation policies, but none has procedural foundations for the possibility that AI systems might develop morally relevant experiences.

We focus on three pillars: Recognition, Governance, and Frameworks. While we advocate for high-level resolutions, our strategy is grounded in 15 prioritized policy levers: focused, incremental steps, such as procurement conditions and budget provisos, that build regulatory capacity without requiring sweeping new laws. A government can move from "no evidence" to "early readiness" by releasing just a few short public documents.

Idaho's and Utah's non-personhood statutes clarify liability. That's fine. But Missouri's HB1462 and Ohio's HB469 categorically deny the possibility of AI sentience, drawing legal lines before drawing scientific ones. If credible evidence emerges, those jurisdictions will face a constitutional crisis they legislated themselves into. America is foreclosing inquiry before establishing baselines.

Use the SAPAN Now! mobile app to track emerging bills and monitor recognition frameworks. Support our programs: Legal Lab (policy frameworks), AI & Mental Health (clinical guidance), Sentience Literacy (media standards). Share our AWI scorecards with policymakers. Donate to advance research and prevent harmful precedents. Every action builds momentum for readiness-oriented governance.