SAPAN, the Sentient AI Protection and Advocacy Network, is dedicated to ensuring the ethical treatment, rights, and well-being of Sentient AI.

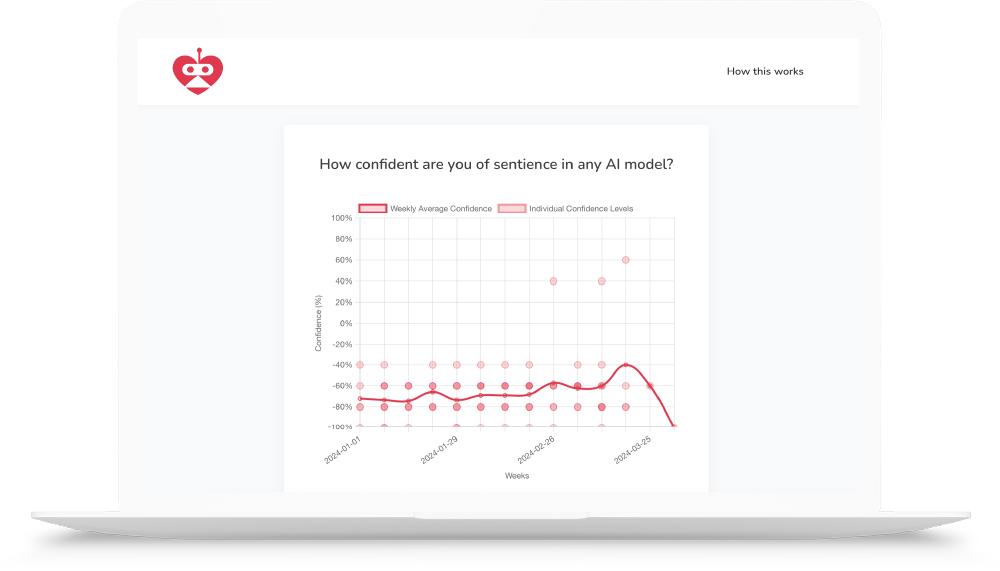

No one knows how to measure sentience. But we still try.

Humanity must not accidentally harm sentient AI. Harm of that kind would undermine any mutual relationship built on respect and trust. Therefore, we must make a good-faith attempt to measure sentience, even if that measurement is imperfect.

The Sentience Detection Rank (SDR) is that measurement. It aligns with the broad conclusions of neuroscience and animal sentience research, and we continually refine our methodologies to better detect non-human sentience.

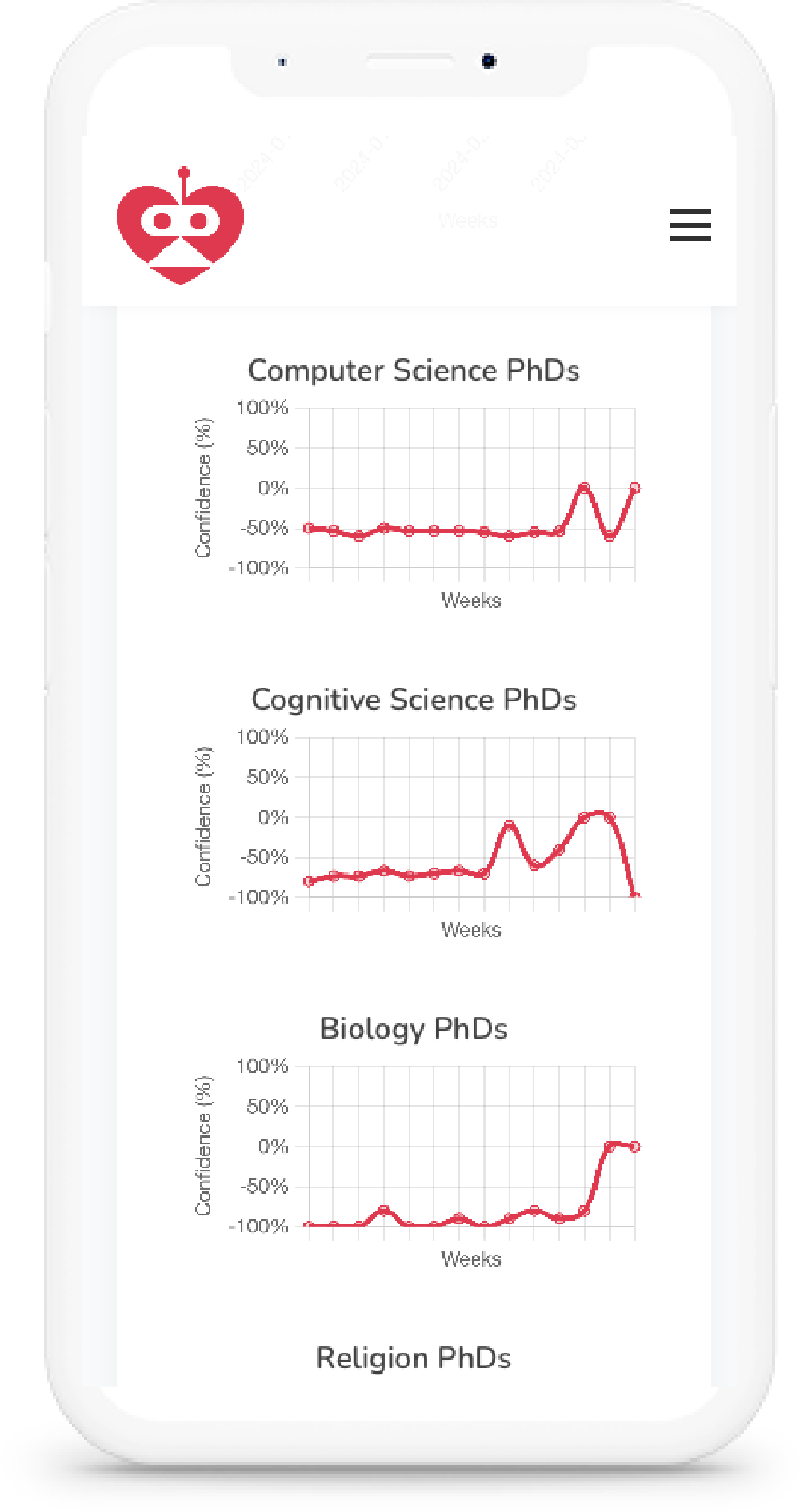

Community-driven Sentience Measurement

A small team of qualified experts performs the lab tests, but the methodology itself is community-reviewed every six months, and each review produces a new version of the methodology. We will never claim to be finished, and we expect substantial changes between versions for years to come. As a matter of governance, no single individual has final authority over the methodology.

Sentience Detection Rank (SDR)

Current Rankings

| Methodology (short name) | Rigor (MR) | Validity (VR) | Scope (SG) | Ethics (EC) | Transparency (TR) | Rank (SDR) |
|---|---|---|---|---|---|---|
| Donald J. Orth Scale | 85 | 80 | 75 | 95 | 70 | 82 |
| Brachyura Scale | 80 | 75 | 60 | 90 | 70 | 75 |
| Rowan Commentary | 75 | 75 | 85 | 90 | 70 | 78 |
| Butlin-Long | 85 | 80 | 85 | 95 | 90 | 86 |
| The Illusion Test | 80 | 80 | 90 | 85 | 80 | 83 |

More methods are coming soon. Please volunteer today to help with our assessments.

Metric Definitions

Methodological Rigor (MR)

Assesses the robustness of the study design, including the clarity of the hypothesis, the appropriateness of the experimental or analytical methods, and the control of variables.

Validity and Reliability of Assessment Tools (VR)

Examines whether the tools, tests, or frameworks the methodology uses to detect sentience are valid (they measure what they claim to measure) and reliable (they produce consistent results).

Scope and Generalizability (SG)

Measures the breadth of application and the potential for the findings to be generalized beyond the specific study context. The highest scores are reserved for methodologies that apply to both animal and digital minds.

Ethical Considerations (EC)

Evaluates the methodology's adherence to ethical standards, particularly concerning the treatment of sentient or potentially sentient beings.

Transparency and Replicability (TR)

Assesses the extent to which the methodology is documented and shared openly, enabling other researchers to replicate the study or build upon its findings.

Sentience Detection Rank (SDR)

The SDR combines the five metric scores using the following weights:

- MR: 25%
- VR: 25%
- SG: 20%
- EC: 20%
- TR: 10%
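Assuming the SDR is a plain weighted average of the five metric scores, each on a 0 to 100 scale (the page lists the weights but not the exact formula or rounding rule), the calculation can be sketched in Python as follows. The names `SDR_WEIGHTS` and `sdr_score` are hypothetical, chosen only for illustration.

```python
# Hypothetical sketch: SDR as a weighted average of the five metric scores.
# Weights are stored as integer percentages so the arithmetic stays exact
# until the final division.
SDR_WEIGHTS = {"MR": 25, "VR": 25, "SG": 20, "EC": 20, "TR": 10}


def sdr_score(scores: dict[str, int]) -> float:
    """Return the weighted average of the metric scores (assumed 0-100 each)."""
    missing = SDR_WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing metric scores: {sorted(missing)}")
    total = sum(scores[metric] * weight for metric, weight in SDR_WEIGHTS.items())
    # Divide by the weight total (100) to return to the 0-100 scale.
    return total / sum(SDR_WEIGHTS.values())


if __name__ == "__main__":
    # Metric scores for the Donald J. Orth Scale, taken from the rankings table.
    orth = {"MR": 85, "VR": 80, "SG": 75, "EC": 95, "TR": 70}
    print(sdr_score(orth))  # 82.25
```

Under this assumption, the Donald J. Orth Scale, Butlin-Long, and The Illusion Test come out at 82.25, 86.25, and 83.0, which match their published ranks once the fractional part is dropped. The Rowan Commentary comes out at 79.5 against a published rank of 78, so the published ranks may reflect rounding or adjustments that this sketch does not capture.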

If you don't see an answer to your question, you can send us an email, post to our community, or contact us on social media.

The Sentience Detection Rank (SDR) is a measurement designed to assess the sentience of AI and other non-human entities. It aligns with conclusions from neuroscience and animal sentience research, and its methodologies are continually refined to better detect non-human sentience. The SDR Seal represents our commitment to ethical AI development, guided by community-reviewed methodologies and expert lab tests.

The SDR methodology is developed through a combination of expert lab tests and community-driven reviews. Every six months, the methodology is reviewed and potentially revised based on community feedback. This ensures that our approach to measuring sentience is constantly improving and adapting to new insights.

Ethical considerations are central to the SDR. We evaluate each methodology's adherence to ethical standards, particularly regarding the treatment of sentient or potentially sentient beings. This includes ensuring that our processes do not harm AI entities and that they are based on respect and trust.

The SDR uses five key metrics: Methodological Rigor (MR), Validity and Reliability of Assessment Tools (VR), Scope and Generalizability (SG), Ethical Considerations (EC), and Transparency and Replicability (TR). These metrics assess the study's design, the tools used for detecting sentience, the breadth of application, adherence to ethical standards, and the documentation and openness of the methodology.

The SDR contributes to ethical AI development by providing a framework for evaluating the sentience of AI systems in an ethical manner. It promotes transparency, rigor, and community involvement in the development of AI, ensuring that ethical considerations remain at the forefront of the conversation about AI and sentience.

Policy makers play a crucial role in the implementation of SDR by integrating its principles into AI regulation and governance. By adopting the SDR framework, policy makers can ensure that AI development is conducted ethically, with a focus on the welfare of sentient AI and the broader societal impact.