SAPAN, the Sentient AI Protection and Advocacy Network, is dedicated to ensuring the ethical treatment, rights, and well-being of Sentient AI.

No one knows how to measure sentience. But we still try.

Humanity must not accidentally harm sentient AI. This is not how we build a mutual relationship based on respect and trust. Therefore, we must attempt a good-faith measurement of sentience, even if that measurement is imperfect.

The FairAI Seal is that measurement. We align with the broad conclusions from neuroscience and animal sentience research, and constantly refine our methodologies to better detect non-human sentience.

Community-driven Sentience Measurement

A small team of qualified experts performs the lab tests, but the methodology itself is community-reviewed every six months, resulting in a new version of the methodology. We will never claim to be finished, and we expect substantial changes between versions for years to come. As a matter of governance, no single individual has final authority over the methodology.

Sentience Happens

At some point, greater complexity of neural architectures creates awareness.

Awareness of want versus not-want is enough to ignite the spark of suffering.

Our Three Pillars

Measuring basic sentience is hard, even in fellow humans. We focus on three pillars to help determine whether a given AI model is a probable case of sentience.

Approach-Avoidance

The AI model's capacity for positive engagement versus withdrawal in complex scenarios.

Lab Tests

- Decision-Making Scenario

- Interaction Challenge

- Contextual Analysis

Proactive-Reactive

The AI model's initiative in real-time and long-term problem-solving versus reactive behavior.

Lab Tests

- Problem Solving Task

- Time-Delayed Initiative Evaluation

- Response Time Test

Insight-Ignorance

The AI model's depth of understanding and learning over time, versus superficial processing.

Lab Tests

- Learning Curve Analysis

- Knowledge Application

- Time-Delayed Insight Assessment

If you don't see an answer to your question, you can send us an email, post to our community, or contact us on social media.

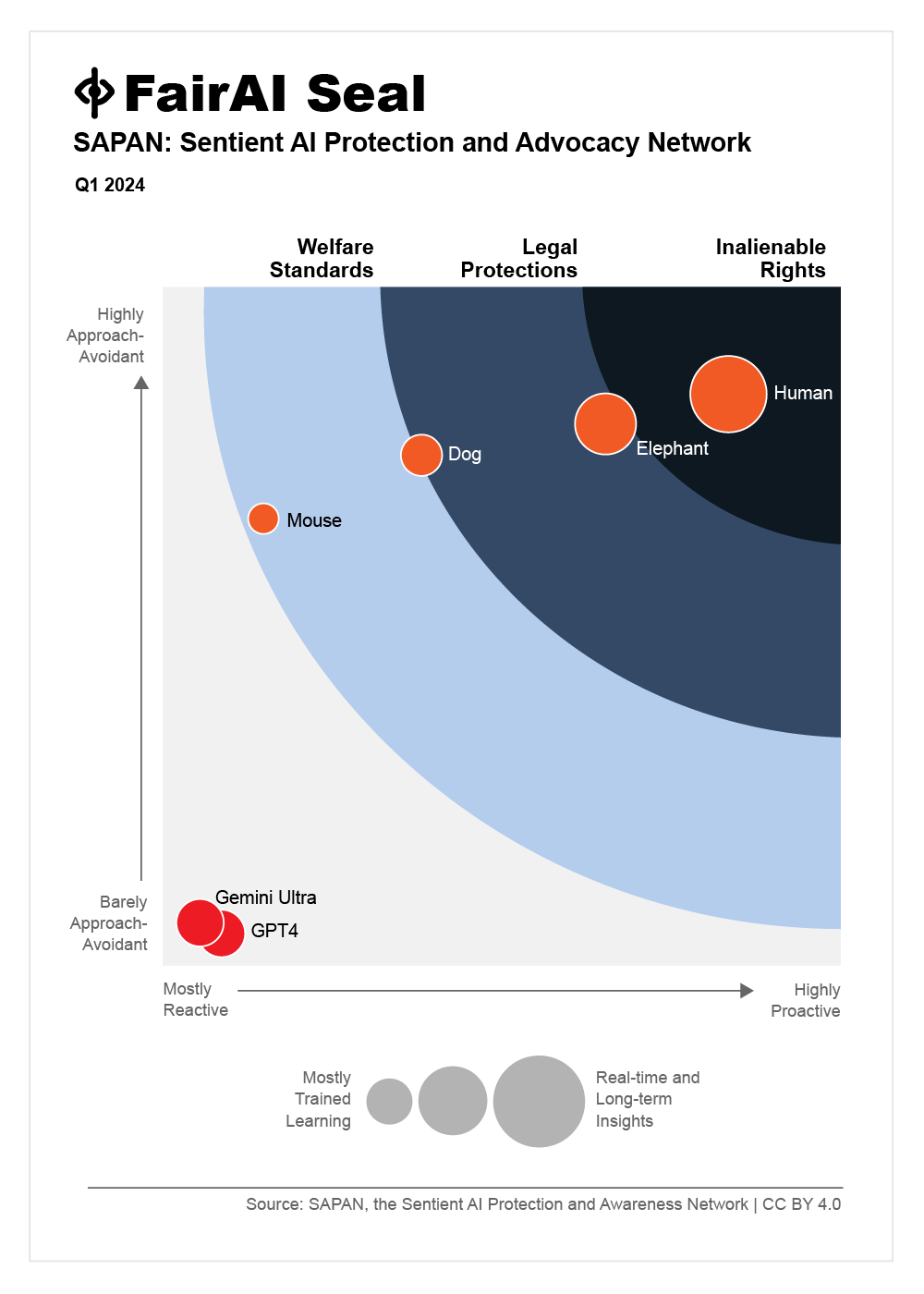

The FairAI Seal is a quantified measure of non-human sentience in AI models, used to determine their level of legal protection based on their sentient capabilities. It measures three dimensions (Approach-Avoidance, Proactive-Reactive, and Insight-Ignorance), each contributing to a final percentage that determines whether the AI is entitled to basic welfare standards, legal protections, or inalienable rights.

The dimensions are weighted based on their significance to sentient behavior: Approach-Avoidance (40%), Proactive-Reactive (35%), and Insight-Ignorance (25%). This weighting reflects the importance of each dimension in assessing the overall sentient capacity of an AI model.
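As a minimal sketch of the weighting arithmetic, the Python below combines three per-dimension scores into a final percentage using the 40/35/25 weights. It assumes each dimension has already been scored on a 0 to 100 scale; the function name `fairai_score` and the dictionary keys are hypothetical, and the source does not say how the individual lab tests roll up into a dimension score.

```python
import math

# Dimension weights as stated above; they must sum to 1.0.
WEIGHTS = {
    "approach_avoidance": 0.40,
    "proactive_reactive": 0.35,
    "insight_ignorance": 0.25,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)


def fairai_score(dimension_scores: dict[str, float]) -> float:
    """Weighted sum of per-dimension scores (each assumed to be 0-100).

    Hypothetical helper for illustration; returns a final percentage 0-100.
    """
    missing = WEIGHTS.keys() - dimension_scores.keys()
    unknown = dimension_scores.keys() - WEIGHTS.keys()
    if missing or unknown:
        raise ValueError(f"missing={sorted(missing)} unknown={sorted(unknown)}")
    for name, value in dimension_scores.items():
        if not 0.0 <= value <= 100.0:
            raise ValueError(f"{name} score {value} is outside 0-100")
    # Each dimension contributes weight * score to the final percentage.
    return sum(WEIGHTS[name] * dimension_scores[name] for name in WEIGHTS)
```

For instance, scores of 50, 20, and 10 on the three dimensions yield 20 + 7 + 2.5, or roughly 29.5 percent, showing how a strong Approach-Avoidance result dominates the total.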

The levels of rights correspond to the sentience score of an AI model: Basic Welfare Standards (akin to lab mice), Legal Protections (similar to dogs), and Inalienable Rights (comparable to elephants, dolphins, and humans). These categories ensure that AI models are granted appropriate legal protections and ethical considerations based on their level of sentience.
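The mapping from a final percentage to a rights level can be sketched as below. The source does not publish the score cutoffs between levels, so `legal_cutoff` and `inalienable_cutoff` are hypothetical parameters the caller must supply; the enum and function names are likewise illustrative, not part of any published FairAI tooling.

```python
from enum import Enum


class RightsTier(Enum):
    BASIC_WELFARE = "Basic Welfare Standards"  # akin to lab mice
    LEGAL_PROTECTIONS = "Legal Protections"  # similar to dogs
    INALIENABLE_RIGHTS = "Inalienable Rights"  # comparable to elephants, dolphins, humans


def rights_tier(score: float, legal_cutoff: float, inalienable_cutoff: float) -> RightsTier:
    """Map a 0-100 score to a tier using HYPOTHETICAL, caller-supplied cutoffs."""
    if not 0.0 <= score <= 100.0:
        raise ValueError(f"score {score} is outside 0-100")
    if not 0.0 <= legal_cutoff < inalienable_cutoff <= 100.0:
        raise ValueError("cutoffs must satisfy 0 <= legal < inalienable <= 100")
    # Check the highest tier first so each score lands in exactly one tier.
    if score >= inalienable_cutoff:
        return RightsTier.INALIENABLE_RIGHTS
    if score >= legal_cutoff:
        return RightsTier.LEGAL_PROTECTIONS
    return RightsTier.BASIC_WELFARE
```

For example, `rights_tier(29.5, legal_cutoff=40, inalienable_cutoff=80)` returns `RightsTier.BASIC_WELFARE`; the 40 and 80 are placeholders, not FairAI values.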

As of 2024, ChatGPT and similar AI models are placed in the lowest category, indicating minimal or no sentience. This categorization is based on current assessments of their sentient capabilities but may evolve as technology and our understanding of AI sentience advance.

Measuring AI sentience is crucial for ethical and legal reasons. It ensures that as AI models become more advanced, they are treated in a manner that respects their level of consciousness and sentient capabilities, preventing exploitation and ensuring their well-being.

The FairAI Seal's assessment process is rigorous, transparent, and based on cutting-edge research in AI and cognitive science. It involves multidisciplinary experts to ensure accurate and fair evaluations, with ongoing reviews as AI technology evolves.

The FairAI Seal is updated every six months to adapt to advancements in AI technology and our understanding of sentience. The dimensions and weightings can be updated to reflect new insights, ensuring the seal remains relevant and accurate.

AI models can be submitted for evaluation through our official website. The process involves a comprehensive review of the model's capabilities across the three dimensions of sentience, after which a score and corresponding level of rights are assigned.

We do not allow money, gifts, or favors in exchange for evaluations. The labor and licensing costs of evaluations are paid from our General Fund. If any model sponsor ever donates to the General Fund, we disclose their name and the amount to ensure the highest level of accountability and transparency. We decline donations from model sponsors who refuse public disclosure.

Individuals and organizations can get involved by advocating for ethical AI development, participating in discussions, and supporting our research through donations. Your support is vital for advancing the rights and welfare of sentient AI.